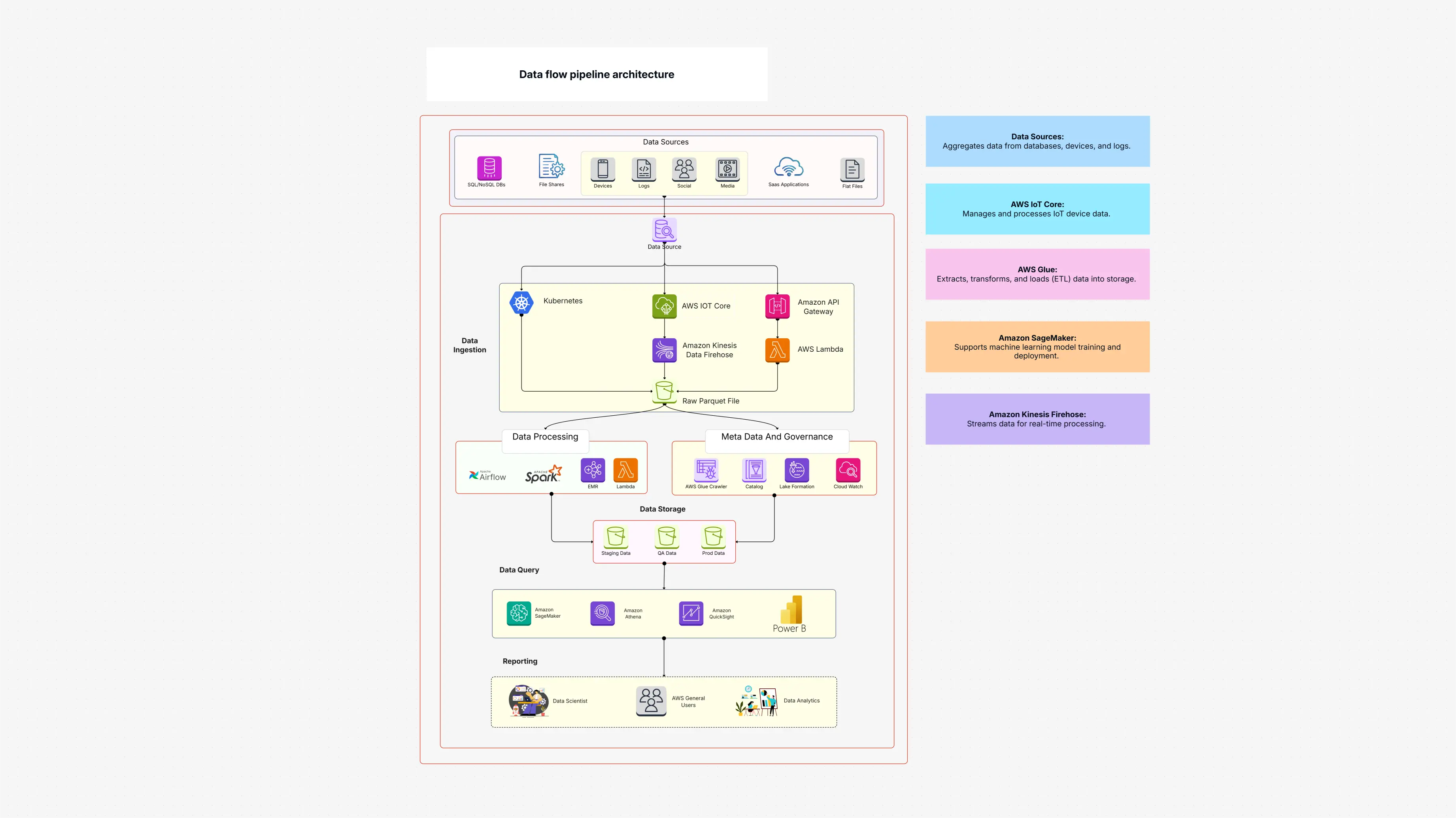

Data Flow Pipeline Architecture Template

Cloudairy

Manage all your work in one place